Inference-Time Optimizations

Inference-time optimizations can improve the performance of your LLM applications without fine-tuning the model.

This guide covers three strategies, each implemented as a variant type in TensorZero: Best-of-N (BoN) sampling, Dynamic In-Context Learning (DICL), and Mixture-of-N (MoN) sampling. Best-of-N sampling generates multiple response candidates and selects the best one using an evaluator model. Dynamic In-Context Learning adds relevant historical examples to the prompt. Mixture-of-N sampling generates multiple response candidates and uses a fuser model to combine them into a single response. All three can improve response quality and consistency in your LLM applications.

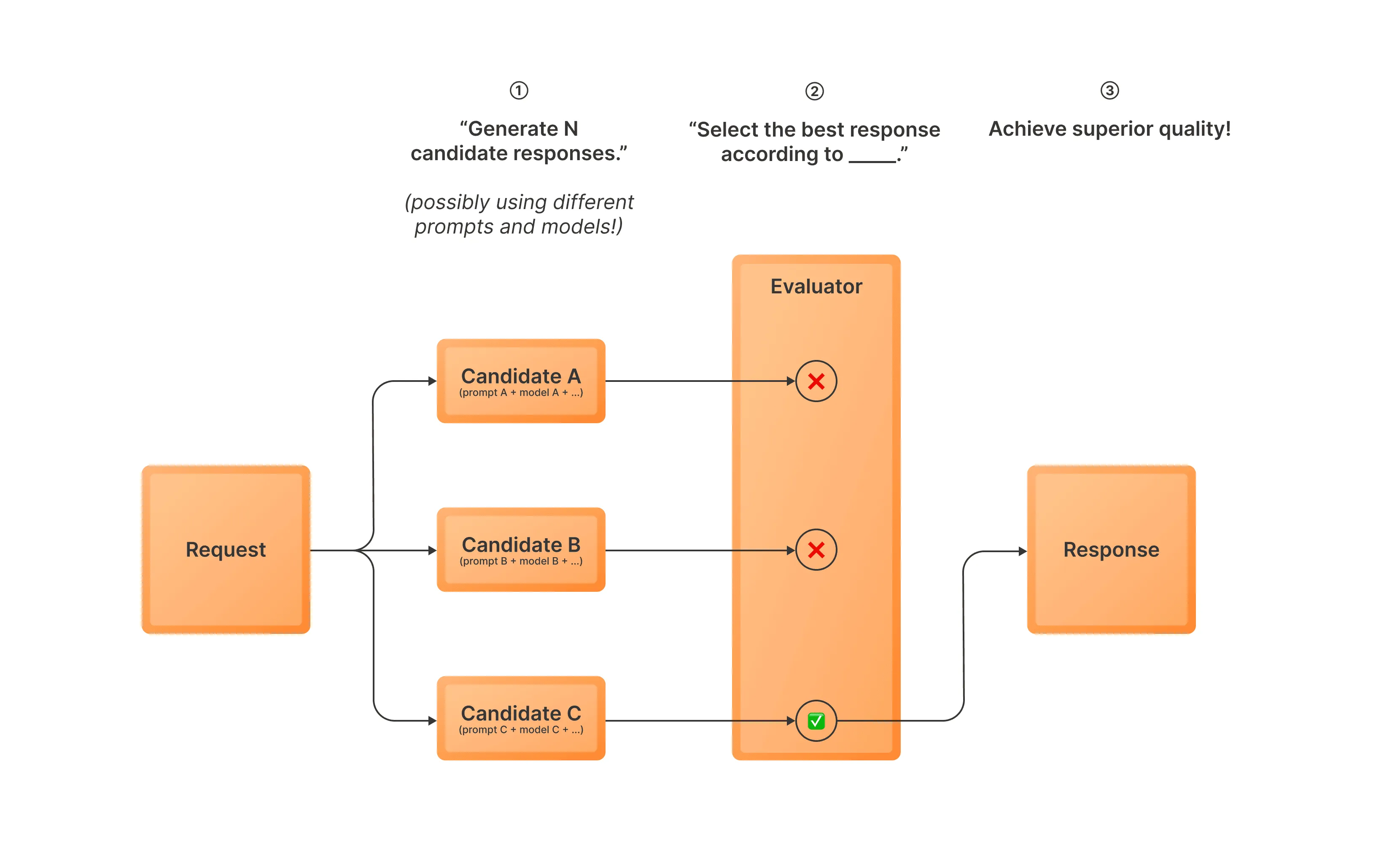

Best-of-N Sampling

Best-of-N (BoN) sampling is an inference-time optimization strategy that can significantly improve the quality of your LLM outputs. Here’s how it works:

- Generate multiple response candidates using one or more variants (i.e. possibly using different models and prompts)

- Use an evaluator model to select the best response from these candidates

- Return the selected response as the final output

This approach allows you to leverage multiple prompts or variants to increase the likelihood of getting a high-quality response. It’s particularly useful when you want to benefit from an ensemble of variants or reduce the impact of occasional bad generations. Best-of-N sampling is sometimes also called rejection sampling.
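The three steps above can be sketched in a few lines of Python. This is a minimal illustration of the general technique, not TensorZero's implementation: the `best_of_n` function, the two stand-in variants, and the length-based evaluator are all hypothetical, and the stand-ins replace real LLM calls so the sketch runs offline.

```python
from typing import Callable, Sequence

# Hypothetical type aliases: a generator maps a prompt to a response, and an
# evaluator returns the index of the candidate it considers best.
Generator = Callable[[str], str]
Evaluator = Callable[[str, Sequence[str]], int]


def best_of_n(prompt: str, generators: Sequence[Generator], evaluator: Evaluator) -> str:
    """Generate one candidate per generator and return the evaluator's pick."""
    candidates = [generate(prompt) for generate in generators]
    best_index = evaluator(prompt, candidates)
    return candidates[best_index]


# Stand-ins for LLM-backed variants (assumptions for this sketch).
def terse_variant(prompt: str) -> str:
    return "Hi."


def polite_variant(prompt: str) -> str:
    return "Hello, thank you for your message. I will reply shortly."


def longest_response_evaluator(prompt: str, candidates: Sequence[str]) -> int:
    # Toy heuristic: prefer the longest candidate. In practice an evaluator
    # model would judge the candidates instead.
    return max(range(len(candidates)), key=lambda i: len(candidates[i]))


# The same generator may appear more than once to draw several candidates from it.
result = best_of_n(
    "Draft a reply to the customer.",
    [terse_variant, terse_variant, polite_variant],
    longest_response_evaluator,
)
print(result)
```

Note that the returned response is always one of the candidates, unchanged; the evaluator only selects.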

To use BoN sampling in TensorZero, you need to configure a variant with the experimental_best_of_n type.

Here’s a simple example configuration:

    [functions.draft_email.variants.promptA]
    type = "chat_completion"
    model = "gpt-4o-mini"
    user_template = "functions/draft_email/promptA/user.minijinja"

    [functions.draft_email.variants.promptB]
    type = "chat_completion"
    model = "gpt-4o-mini"
    user_template = "functions/draft_email/promptB/user.minijinja"

    [functions.draft_email.variants.best_of_n]
    type = "experimental_best_of_n"
    candidates = ["promptA", "promptA", "promptB"]
    weight = 1

    [functions.draft_email.variants.best_of_n.evaluator]
    model = "gpt-4o-mini"
    user_template = "functions/draft_email/best_of_n/user.minijinja"

In this configuration:

- We define a best_of_n variant that uses two different variants (promptA and promptB) to generate candidates. It generates two candidates using promptA and one candidate using promptB.

- The evaluator block specifies the model and instructions for selecting the best response.

Read more about the experimental_best_of_n variant type in Configuration Reference.

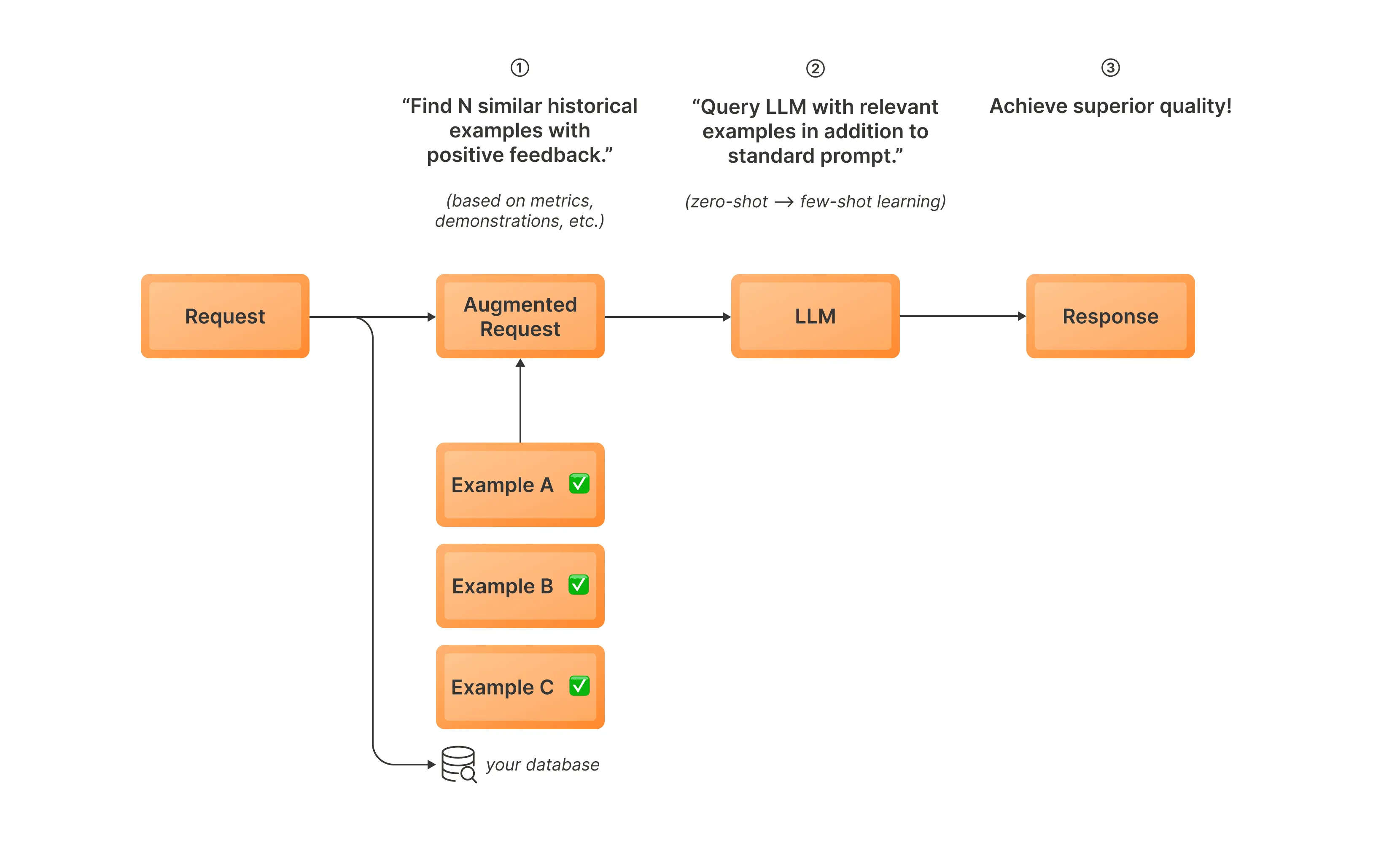

Dynamic In-Context Learning (DICL)

Dynamic In-Context Learning (DICL) is an inference-time optimization strategy that enhances LLM performance by incorporating relevant historical examples into the prompt. It draws on a database of past interactions, selecting examples that are similar to the current input and including them in the prompt, so the model can adapt to specific tasks or domains without fine-tuning. By dynamically augmenting the input with relevant historical data, DICL helps the LLM produce more informed and accurate responses, effectively learning from past experience in real time.

Here’s how it works:

- Before inference: curate reference examples, embed them, and store them in the database

- Embed the current input using an embedding model and retrieve similar high-quality examples from a database of past interactions

- Incorporate these examples into the prompt to provide additional context

- Generate a response using the enhanced prompt
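The retrieval part of these steps can be sketched as follows. This is an illustration under loud assumptions: the bag-of-words `embed` function stands in for a real embedding model, the in-memory `store` list stands in for the database, and the helper names and prompt layout are hypothetical rather than what TensorZero uses internally.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    # Toy bag-of-words "embedding" (an assumption for this sketch); a real
    # setup would call an embedding model instead.
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


# Before inference: curate examples and store them alongside their embeddings.
examples = [
    ("write a refund email", "Dear customer, your refund is on its way."),
    ("write a meeting invite", "Hi team, please join us on Monday."),
    ("summarize the quarterly report", "Revenue grew this quarter."),
]
store = [(embed(inp), inp, out) for inp, out in examples]


def retrieve(query: str, k: int) -> list[tuple[str, str]]:
    # Embed the current input and keep the k most similar stored examples.
    query_vec = embed(query)
    ranked = sorted(
        store,
        key=lambda row: cosine_similarity(query_vec, row[0]),
        reverse=True,
    )
    return [(inp, out) for _, inp, out in ranked[:k]]


def build_prompt(query: str, k: int = 2) -> str:
    # Place the retrieved examples ahead of the current input.
    shots = "\n".join(f"Input: {i}\nOutput: {o}" for i, o in retrieve(query, k))
    return f"{shots}\nInput: {query}\nOutput:"


# The final step (not shown) would send this prompt to the LLM.
prompt = build_prompt("write a refund email for a late order", k=1)
print(prompt)
```

With k=1, only the refund example is included, because it shares the most tokens with the query.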

To use DICL in TensorZero, you need to configure a variant with the experimental_dynamic_in_context_learning type.

Here’s a simple example configuration:

    [functions.draft_email.variants.dicl]
    type = "experimental_dynamic_in_context_learning"
    model = "gpt-4o-mini"
    embedding_model = "text-embedding-3-small"
    system_instructions = "functions/draft_email/dicl/system.txt"
    k = 5

    [embedding_models.text-embedding-3-small]
    routing = ["openai"]

    [embedding_models.text-embedding-3-small.providers.openai]
    type = "openai"
    model_name = "text-embedding-3-small"

In this configuration:

- We define a dicl variant that uses the experimental_dynamic_in_context_learning type.

- The embedding_model field specifies the model used to embed inputs for similarity search. We also need to define this model in the embedding_models section.

- The k parameter determines the number of similar examples to retrieve and incorporate into the prompt.

To use Dynamic In-Context Learning (DICL), you also need to add relevant examples to the DynamicInContextLearningExample table in your ClickHouse database.

These examples will be used by the DICL variant to enhance the context of your prompts at inference time.

The process of adding these examples to the database is crucial for DICL to function properly. We provide a sample recipe that simplifies this process: Dynamic In-Context Learning with OpenAI.

This recipe supports selecting examples based on boolean metrics, float metrics, and demonstrations.

It helps you populate the DynamicInContextLearningExample table with high-quality, relevant examples from your historical data.
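To make the three selection criteria concrete, here is a rough sketch of how historical records might be filtered into examples. It is not the recipe itself: the `Record` schema, the `curate` function, and the 0.8 threshold are hypothetical, and combining all three criteria in one pass is a simplification for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Record:
    # Hypothetical schema for illustration; not the actual ClickHouse tables.
    input: str
    output: str
    bool_metric: Optional[bool] = None    # e.g. a success flag
    float_metric: Optional[float] = None  # e.g. a quality score
    demonstration: Optional[str] = None   # e.g. a human-written ideal output


def curate(records: list[Record], float_threshold: float = 0.8) -> list[tuple[str, str]]:
    """Return (input, output) pairs that are worth using as examples."""
    curated = []
    for record in records:
        if record.demonstration is not None:
            # Assumption: a demonstration is preferred over the generated output.
            curated.append((record.input, record.demonstration))
        elif record.bool_metric is True:
            curated.append((record.input, record.output))
        elif record.float_metric is not None and record.float_metric >= float_threshold:
            curated.append((record.input, record.output))
    return curated


records = [
    Record("a", "bad", bool_metric=False),
    Record("b", "good", bool_metric=True),
    Record("c", "ok", float_metric=0.9),
    Record("d", "meh", float_metric=0.5),
    Record("e", "draft", demonstration="ideal"),
]
curated = curate(records)
print(curated)
```

Records that fail the boolean metric or fall below the float threshold are dropped, so only the stronger examples reach the prompt.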

For more information on the DynamicInContextLearningExample table and its role in the TensorZero data model, see Data Model.

For a comprehensive list of configuration options for the experimental_dynamic_in_context_learning variant type, see Configuration Reference.

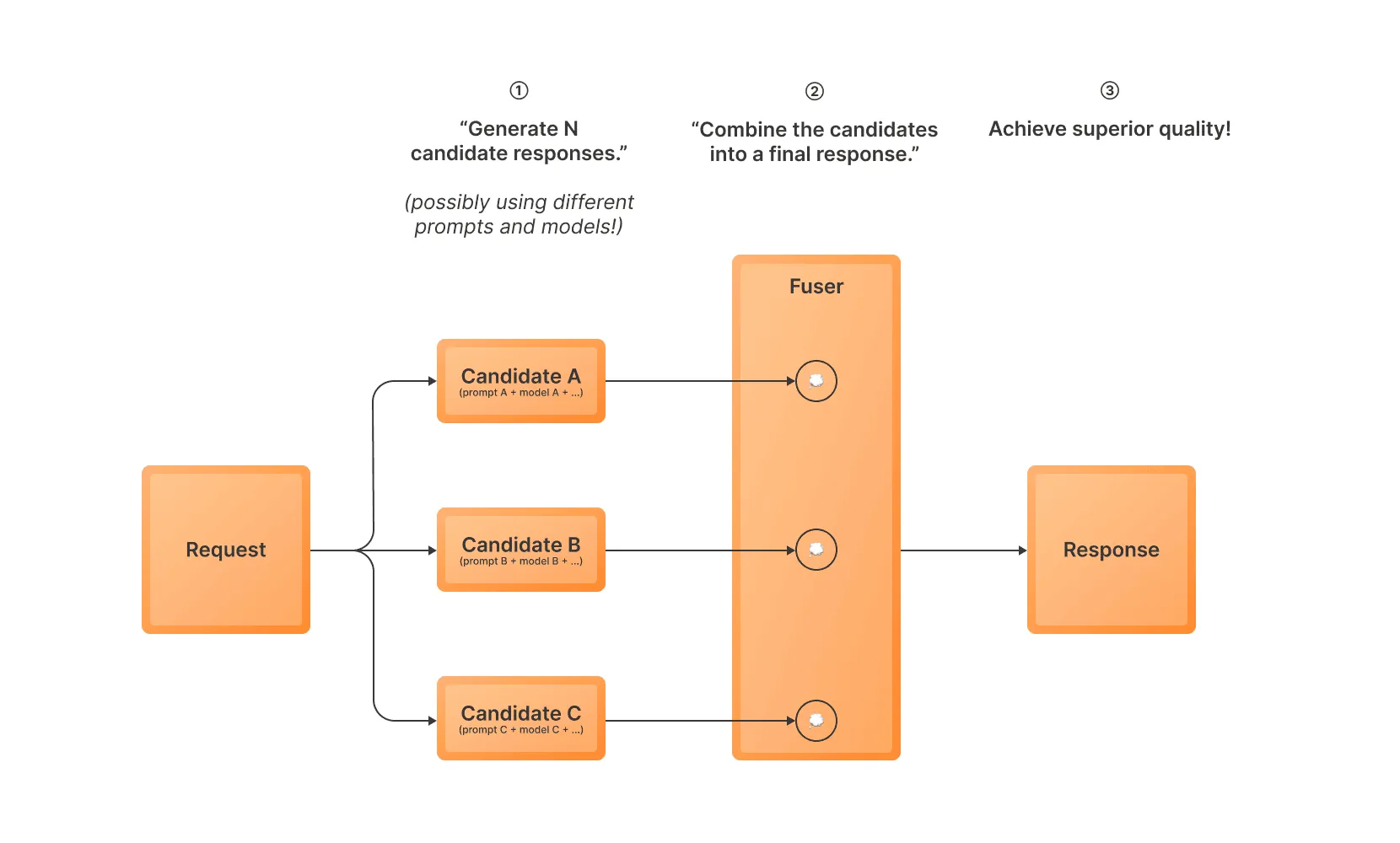

Mixture-of-N Sampling

Mixture-of-N (MoN) sampling is an inference-time optimization strategy that can significantly improve the quality of your LLM outputs. Here’s how it works:

- Generate multiple response candidates using one or more variants (i.e. possibly using different models and prompts)

- Use a fuser model to combine the candidates into a single response

- Return the combined response as the final output

This approach allows you to leverage multiple prompts or variants to increase the likelihood of getting a high-quality response. It’s particularly useful when you want to benefit from an ensemble of variants or reduce the impact of occasional bad generations.
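A minimal Python sketch of these steps follows. As an illustration of the general technique only, it uses hypothetical names throughout: `mixture_of_n`, the two stand-in variants, and the toy `joining_fuser` replace real LLM calls so the sketch runs offline.

```python
from typing import Callable, Sequence

# Hypothetical type aliases: a generator maps a prompt to a response, and a
# fuser maps the prompt plus all candidates to one combined response.
Generator = Callable[[str], str]
Fuser = Callable[[str, Sequence[str]], str]


def mixture_of_n(prompt: str, generators: Sequence[Generator], fuser: Fuser) -> str:
    """Generate one candidate per generator and return the fused response."""
    candidates = [generate(prompt) for generate in generators]
    return fuser(prompt, candidates)


# Stand-ins for LLM-backed variants (assumptions for this sketch).
def greeting_variant(prompt: str) -> str:
    return "Hello Sam,"


def body_variant(prompt: str) -> str:
    return "Your order ships tomorrow."


def joining_fuser(prompt: str, candidates: Sequence[str]) -> str:
    # Toy fuser: keep each distinct candidate once, in order, and join them.
    # In practice a fuser model would write a new response from the candidates.
    unique = list(dict.fromkeys(candidates))
    return " ".join(unique)


result = mixture_of_n(
    "Draft a shipping update for Sam.",
    [greeting_variant, greeting_variant, body_variant],
    joining_fuser,
)
print(result)
```

The fused output here matches none of the individual candidates, which is the point: the fuser produces a new response instead of selecting an existing one.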

To use MoN sampling in TensorZero, you need to configure a variant with the experimental_mixture_of_n type.

Here’s a simple example configuration:

    [functions.draft_email.variants.promptA]
    type = "chat_completion"
    model = "gpt-4o-mini"
    user_template = "functions/draft_email/promptA/user.minijinja"
    weight = 0

    [functions.draft_email.variants.promptB]
    type = "chat_completion"
    model = "gpt-4o-mini"
    user_template = "functions/draft_email/promptB/user.minijinja"
    weight = 0

    [functions.draft_email.variants.mixture_of_n]
    type = "experimental_mixture_of_n"
    candidates = ["promptA", "promptA", "promptB"]
    weight = 1

    [functions.draft_email.variants.mixture_of_n.fuser]
    model = "gpt-4o-mini"
    user_template = "functions/draft_email/mixture_of_n/user.minijinja"

In this configuration:

- We define a mixture_of_n variant that uses two different variants (promptA and promptB) to generate candidates. It generates two candidates using promptA and one candidate using promptB.

- The fuser block specifies the model and instructions for combining the candidates into a single response.

Read more about the experimental_mixture_of_n variant type in Configuration Reference.