Case Study: Automating Code Changelogs at a Large Bank with LLMs

Summary

One of the largest European banks used TensorZero to build an LLM-powered changelog generator for GitLab merge requests. They optimized model quality, deployed fully on-premise, and iterated quickly, all without needing a team of ML engineers.

Overview

Mark is a DevOps engineer at one of Europe’s largest banks, managing internal tooling for a massive codebase. Engineers in the organization were required to write detailed changelogs for every GitLab merge request (similar to a GitHub pull request) to improve long-term maintainability. The process was time-consuming and frustrating for the engineering team: writing comprehensive changelogs required significant effort, especially given the complexity of their enterprise codebase. In practice, most changelogs were skipped or incomplete.

Mark wondered: could LLMs automate this workflow and do a better job?

The Problem

Off-the-shelf LLMs didn’t perform well.

Models couldn’t follow the organization’s specific changelog format, often producing unstructured or inconsistent outputs. They lacked context about the large, complex codebase and generated superficial or inaccurate summaries that missed the true scope of changes. Localization was another blocker: changelogs needed to be written in a local non-English language, which the models struggled with.

Strict compliance rules required that all data remain on-premise, but most LLM tools were cloud-only. Iteration was also difficult: the team struggled to monitor performance, compare versions, and track regressions. The team had deep expertise in infrastructure and DevOps, but not in machine learning. They needed a solution that was self-hosted, easy to customize, and simple to operate without ML expertise.

The Solution

Mark and his team adopted TensorZero to build and operate their changelog automation system. Within days, the team had integrated it into their existing CI/CD pipelines, connecting directly to GitLab merge requests.

TensorZero provided a clean abstraction over model inference, prompt configuration, observability, A/B testing, structured generation, and more. The best part? TensorZero is fully open source.

As engineers edited and approved the AI-generated changelogs, those human revisions were automatically used by TensorZero to optimize future inference requests.

Mark and his team leveraged TensorZero’s built-in dynamic in-context learning feature to make the system get smarter over time — without fine-tuning, labeling datasets, or writing complex pipelines. It was a human-in-the-loop learning flywheel, out of the box.
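The case study does not say exactly how engineer edits are captured, so the following is only a hypothetical Python sketch of the idea, not a TensorZero API. Each reviewed changelog becomes an (input, output) example whose output is the engineer's approved text rather than the model's draft; rejected drafts are discarded; and the similarity between draft and final text, computed with the standard library's `difflib.SequenceMatcher`, serves as a simple signal of how much editing was needed. The names `ReviewedChangelog`, `edit_similarity`, and `harvest` are illustrative assumptions.

```python
from __future__ import annotations

import difflib
from dataclasses import dataclass
from typing import Optional


@dataclass
class ReviewedChangelog:
    mr_diff: str            # input the model saw (hypothetical field name)
    draft: str              # changelog generated by the model
    final: Optional[str]    # engineer-approved text; None if the draft was rejected


def edit_similarity(draft: str, final: str) -> float:
    """Return 1.0 if the draft was approved unchanged; lower values mean heavier edits."""
    return difflib.SequenceMatcher(None, draft, final).ratio()


def harvest(reviews: list[ReviewedChangelog]) -> tuple[list[tuple[str, str]], float]:
    """Turn reviewed changelogs into (input, output) examples for future retrieval,
    plus the average draft-to-final similarity as a rough quality signal."""
    examples: list[tuple[str, str]] = []
    scores: list[float] = []
    for review in reviews:
        if review.final is None:
            continue  # rejected drafts are not used as examples
        # Store the human-approved version, not the model's draft.
        examples.append((review.mr_diff, review.final))
        scores.append(edit_similarity(review.draft, review.final))
    average = sum(scores) / len(scores) if scores else 0.0
    return examples, average
```

In this sketch, a rising average similarity over time would suggest that engineers are editing drafts less, and the harvested pairs are the kind of material a retrieval step such as dynamic in-context learning could draw from.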

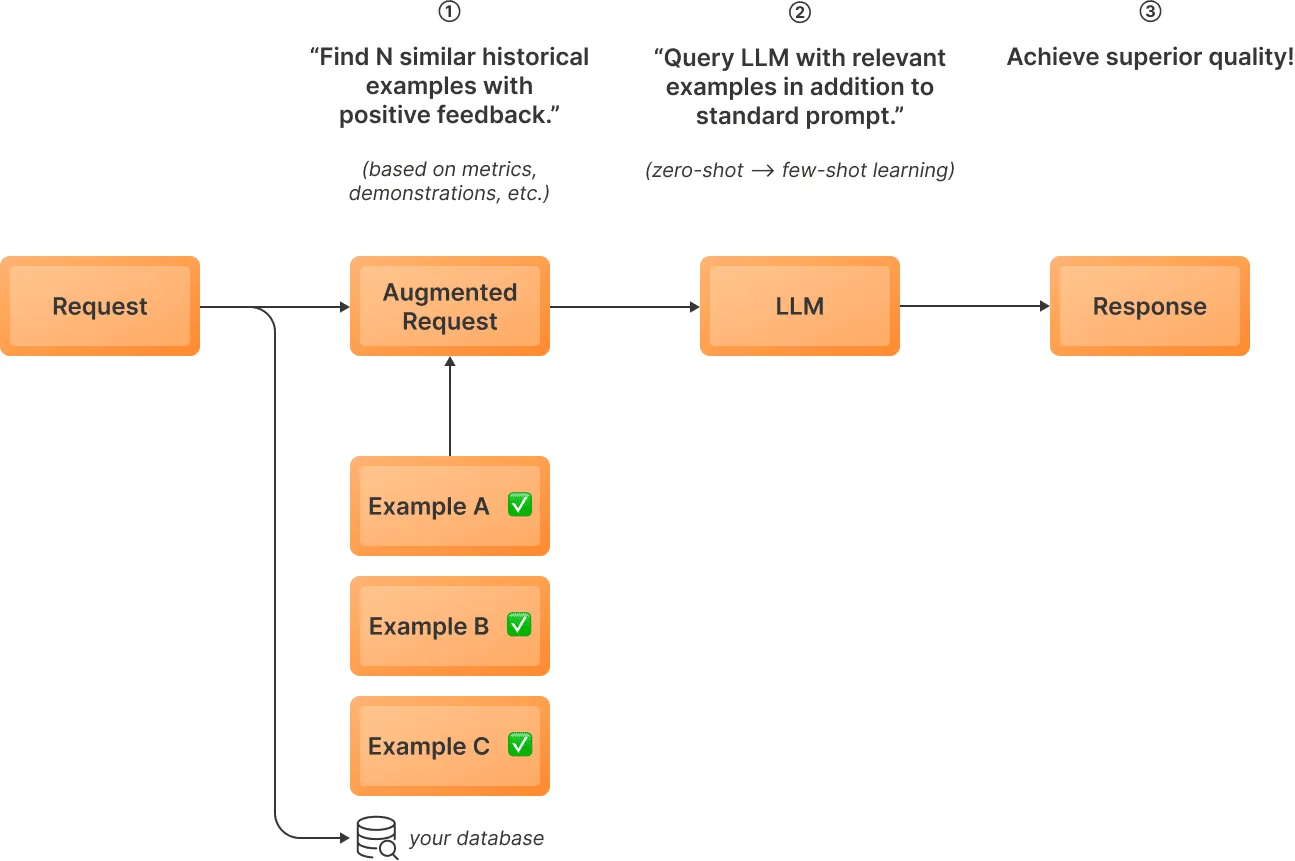

What is Dynamic In-Context Learning (DICL)?

Dynamic In-Context Learning (DICL) is an inference-time optimization strategy that enhances LLM performance by incorporating relevant historical examples into the prompt.

This technique draws on a database of past interactions, selecting contextually similar examples and including them in the current prompt, which allows the model to adapt to specific tasks or domains without fine-tuning. By dynamically augmenting the input with relevant historical data, DICL enables the LLM to produce more informed and accurate responses, effectively learning from past interactions in real time.

Here’s how it works:

- Before inference: curate reference examples, embed them, and store them in a database

- Embed the current input using an embedding model and retrieve similar high-quality examples from a database of past interactions

- Incorporate these examples into the prompt to provide additional context

- Generate a response using the enhanced prompt
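The steps above can be sketched in a few dozen lines of Python. This is a minimal illustration under stated assumptions, not TensorZero's implementation: `embed` is a toy bag-of-words stand-in for a real embedding model, the in-memory `ExampleStore` stands in for the database, and all names (`ExampleStore`, `build_prompt`, the `diff`/`changelog` fields, and the sample data) are hypothetical. The final step, sending the prompt to a model, is left out.

```python
from __future__ import annotations

import math
import re
from collections import Counter
from dataclasses import dataclass


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model (assumption): lowercase token counts."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse token-count vectors."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


@dataclass
class Example:
    diff: str        # past input: merge request diff (hypothetical field)
    changelog: str   # past output: human-approved changelog
    vector: Counter  # embedding of the diff


class ExampleStore:
    """In-memory stand-in for the example database (hypothetical)."""

    def __init__(self) -> None:
        self.examples: list[Example] = []

    def add(self, diff: str, changelog: str) -> None:
        # Before inference: embed a curated example and store it.
        self.examples.append(Example(diff, changelog, embed(diff)))

    def nearest(self, diff: str, k: int = 2) -> list[Example]:
        # Embed the current input and return the k most similar stored examples.
        query = embed(diff)
        ranked = sorted(self.examples, key=lambda ex: cosine(query, ex.vector), reverse=True)
        return ranked[:k]


def build_prompt(store: ExampleStore, diff: str, k: int = 2) -> str:
    # Incorporate the retrieved examples into the prompt as few-shot context.
    parts = ["Write a changelog entry for the merge request diff below."]
    for ex in store.nearest(diff, k):
        parts.append(f"Example diff:\n{ex.diff}\nExample changelog:\n{ex.changelog}")
    parts.append(f"Diff:\n{diff}\nChangelog:")
    # The resulting prompt would then be sent to the LLM (not shown).
    return "\n\n".join(parts)


store = ExampleStore()
store.add("fix null check in payment_service validate()", "Fixed null handling in payment validation.")
store.add("bump logging library version", "Updated the logging library.")
store.add("add retry to payment_service client", "Added retries to the payment client.")
print(build_prompt(store, "payment_service validate() crashes on null", k=1))
```

Here a query about `payment_service validate()` retrieves the earlier null-check fix as its closest example. Token-count cosine similarity is only a placeholder; a production system would use dense embeddings from a real model and a proper vector index, but the retrieve-then-prompt structure is the same.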

TensorZero has built-in support for inference-time optimizations like dynamic in-context learning.

By combining TensorZero with Ollama for model serving, the team managed to build a fully self-hosted service. Data never leaves their infrastructure.

The Result

With TensorZero, the team delivered a production-grade LLM system that met enterprise requirements without compromising speed, privacy, or control.

- ↑ Model Quality: TensorZero’s built-in inference-time optimizations made the system improve continuously — learning from engineer edits and approvals to generate better changelogs over time.

- ↑ Data Privacy: TensorZero enabled a fully self-hosted deployment. All components ran inside the organization's own infrastructure, and no data ever left it.

- ↑ Engineering Velocity: The DevOps team shipped an enterprise-grade LLM application without ML engineers thanks to TensorZero’s powerful end-to-end LLMOps platform.

Their engineers can now focus on building great software instead of spending time manually writing changelogs.